Welcome to this overview of setting up ESLZ (Enterprise-Scale Landing Zones) using Terraform and Azure DevOps.

In my previous blog I set up the Terraform ESLZ CAF module and created some custom landing zones and policies.

To get all of this running in a CI/CD pipeline, I am using Azure DevOps.

Why use DevOps?

In this instance, I want a team to be able to work with the landing zones. Currently only I can configure anything, and while I have no problem with that, it is far better if a team can do this, for obvious reasons (not least that the work shouldn't depend on one person)!

Azure DevOps lets me run this in an environment where we can continually improve our code, automate deployments (including keeping all modules up to date) and keep everything neat and tidy in a GitHub repo.

What changes have I made?

I have made a number of changes to facilitate this:

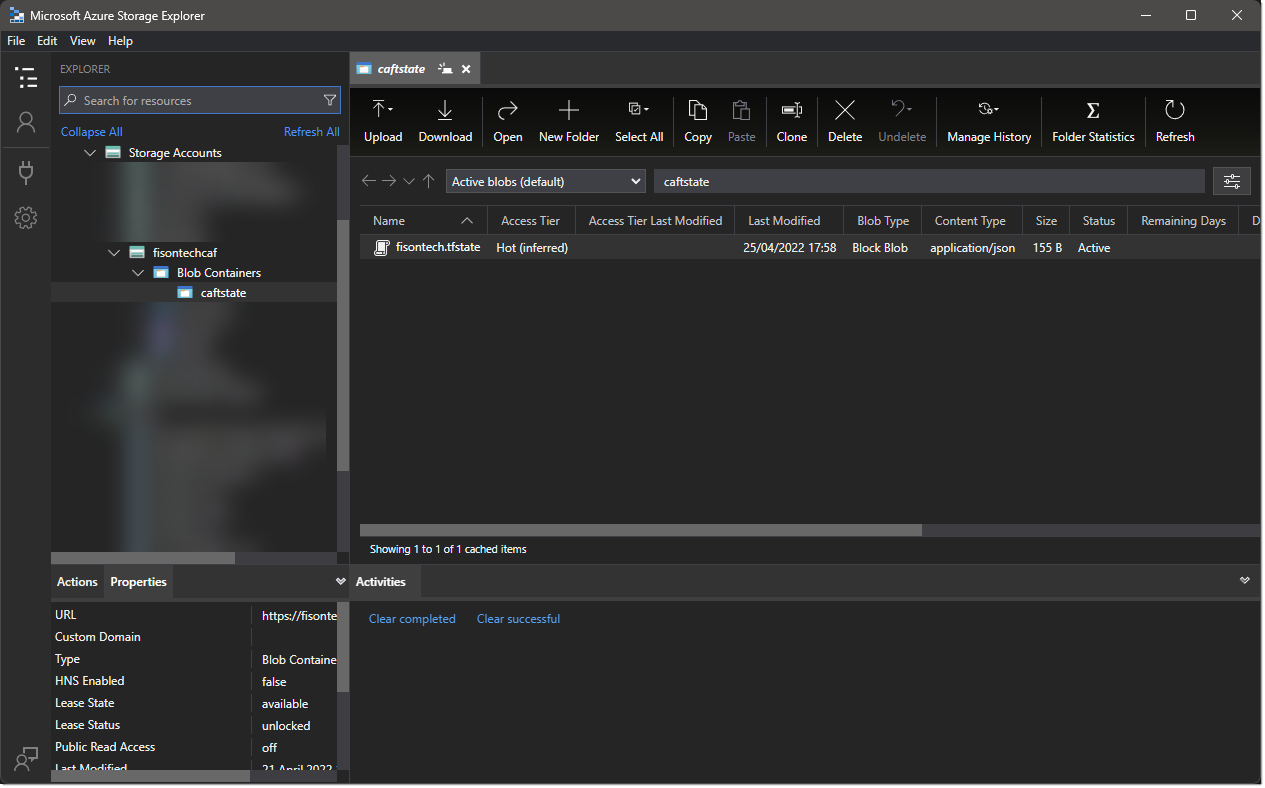

- Terraform State File - Moved my terraform.tfstate file into an Azure Storage blob. Anyone with access to the vault in Azure can now make changes to the state, and it also lets us use keys without exposing them: the pipeline references the Key Vault directly and the keys are pulled at run time with an SPN (service principal), which is much more secure than keeping the key in plain text.

I also had to add the following to the top of my main.tf file to relocate the state, which allows the state file to be shared among teams:

```hcl
# Store Terraform state in an Azure Storage blob so it can be shared
terraform {
  backend "azurerm" {
    resource_group_name  = "fisontech-caf"
    storage_account_name = "fisontechcaf"
    container_name       = "caftstate"
    key                  = "fisontech.tfstate"
  }
}
```
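A possible refinement, sketched here rather than taken from my own setup: the azurerm backend supports partial configuration, so the block in main.tf can be left empty and the values supplied when running terraform init -backend-config=backend.hcl. The file name backend.hcl is hypothetical. In recent Terraform versions, use_azuread_auth makes the backend authenticate to the storage account with the pipeline's SPN instead of an account key; this assumes the SPN has been granted a Storage Blob Data role on the account (check the backend docs for the exact role). If you stick with keys, the backend can also read the access key from the ARM_ACCESS_KEY environment variable, which is a convenient way to hand it a secret pulled from Key Vault.

```hcl
# ---- main.tf: empty backend block, settings supplied at init time ----
terraform {
  backend "azurerm" {}
}

# ---- backend.hcl (hypothetical file name): attribute assignments only ----
# Used with: terraform init -backend-config=backend.hcl
resource_group_name  = "fisontech-caf"
storage_account_name = "fisontechcaf"
container_name       = "caftstate"
key                  = "fisontech.tfstate"

# Authenticate to the blob with Azure AD (the pipeline's SPN) rather than
# the storage account key. Assumes the SPN holds a Storage Blob Data role.
use_azuread_auth = true
```

Keeping the values out of main.tf also means the same code could point at a different state container per environment just by swapping the backend file.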

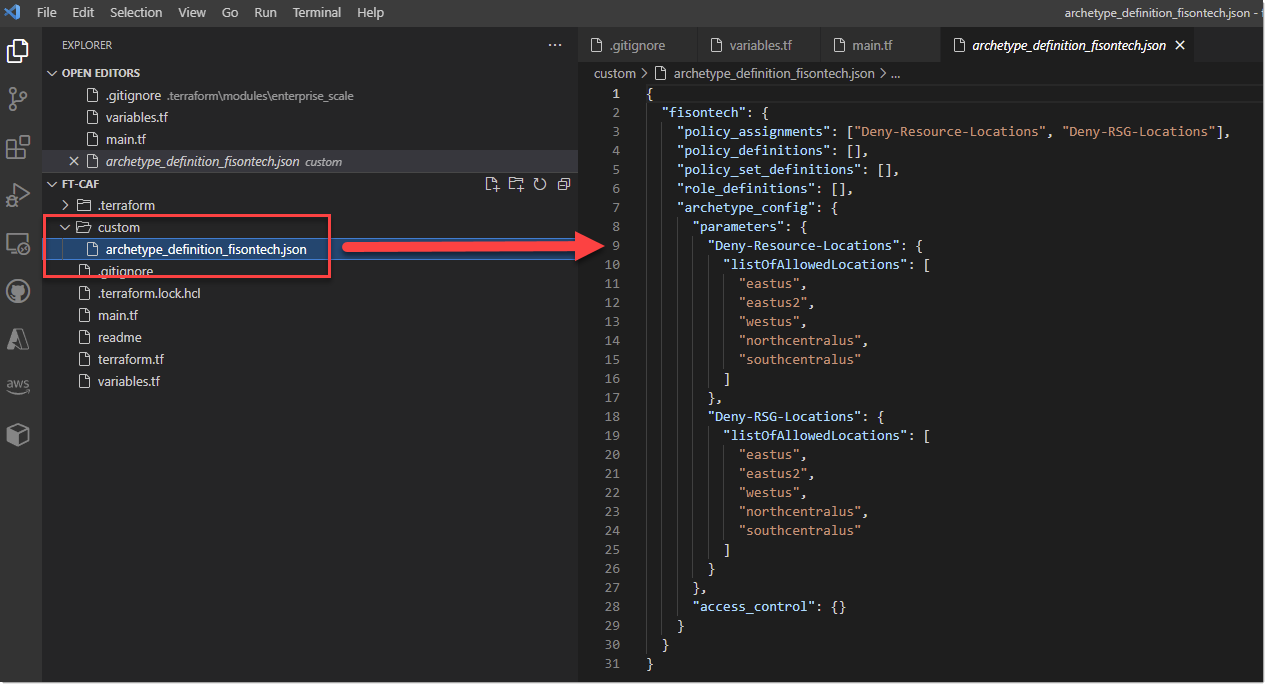

- Custom Archetypes - Created a folder in my GitHub repo that holds all my custom files (just one so far). The pipeline uses this folder, and it gives everyone access to the custom files rather than just me. As with the .tfstate file, the problem was that these .json files normally live within the .terraform folder, which isn't synced into GitHub; the new folder resolves this.

- .gitignore Files - Added an ignore file to the root of my repo. This file actually ships with the CAF module, but it wasn't being applied because it was housed in the .terraform folder, so I've moved it up to the repo root where its rules now take effect. The root .gitignore contains the following:

```gitignore
# Local .terraform directories
**/.terraform/*

# .tfstate files
*.tfstate
*.tfstate.*

# Lock files
.terraform.lock.hcl

# Crash log files
crash.log

# Ignore any .tfvars files that are generated automatically for each Terraform run. Most
# .tfvars files are managed as part of configuration and so should be included in
# version control.
#
# example.tfvars

# Ignore override files as they are usually used to override resources locally and so
# are not checked in
override.tf
override.tf.json
*_override.tf
*_override.tf.json

# Include override files you do wish to add to version control using negated pattern
#
# !example_override.tf

# Include tfplan files to ignore the plan output of command: terraform plan -out=tfplan
# example: *tfplan*

# Ignore any files with .ignore. in the filename
*.ignore.*

# Ignore macOS .DS_Store files which are generated automatically by Finder.
.DS_Store

# Ignore Visual Studio Code config
**/.vscode/*

# Ignore Super-Linter log
**/super-linter.report
**/super-linter.report/*
**/super-linter.log
```

DevOps Basics

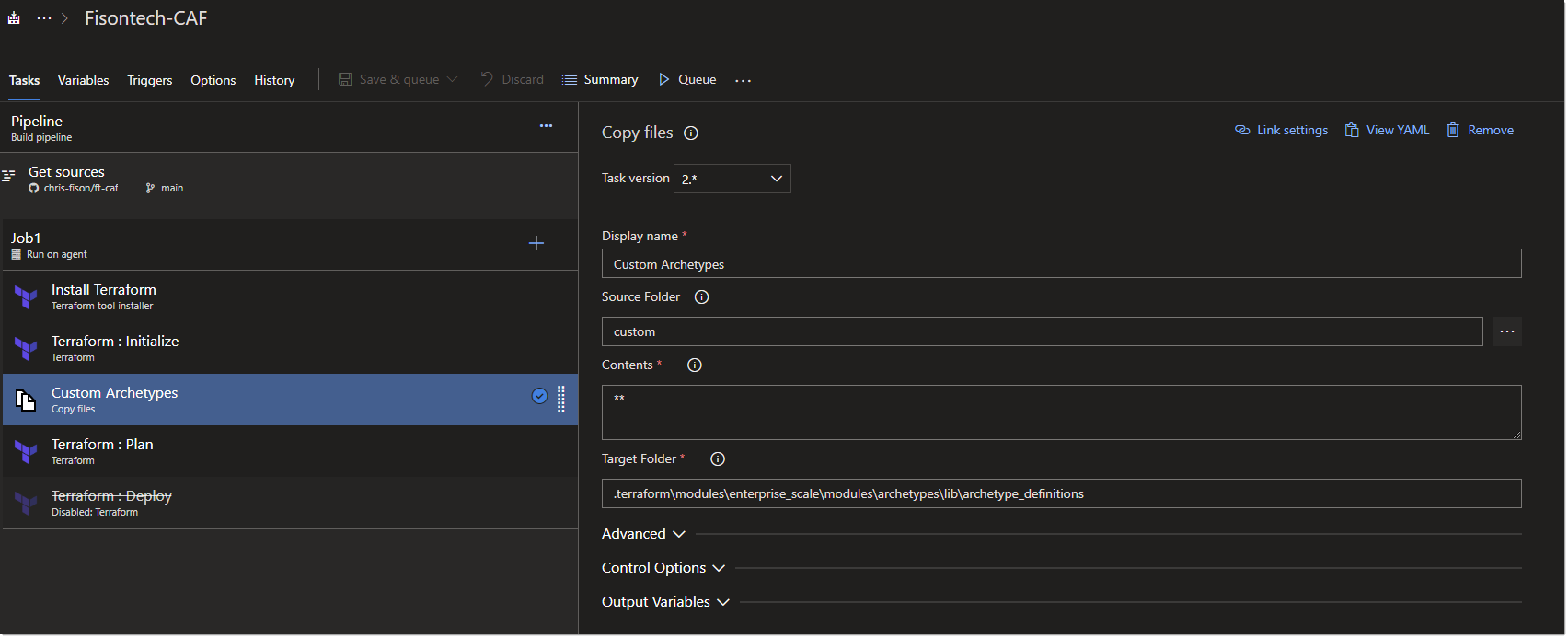

Once these basics were set up, it was a case of setting up the Azure DevOps CI/CD pipeline. For the most part I followed MVP John Lunn's (https://twitter.com/jonnychipz) guide, which will fill in any gaps: https://jonnychipz.com/2021/01/11/deploying-azure-infrastructure-with-terraform-via-azure-devops-pipelines/.

One change I made to John's example, because my project needed it, was adding the custom folder step mentioned earlier to my pipeline.

The copy task essentially copies all the files in the custom folder to the location where my custom archetypes live, which is .terraform\modules\enterprise_scale\modules\archetypes\lib\archetype_definitions. Bear in mind this is a test environment and, for now, the custom files only cover landing zones. Further down the line I will create separate subfolders containing custom files for identity, management, networking, etc.

I created this task and moved my custom archetypes because the .terraform folder is excluded from being synced into GitHub. Once I devise a better way of declaring the template path automatically this will change, but for now it works!
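One candidate for that better way, which I haven't tested in this pipeline: the caf-enterprise-scale module has a library_path input that points it at a custom library folder, so it could read the custom archetypes straight from the repo and the copy task would no longer be needed. A minimal sketch, assuming the module block is named enterprise_scale (as the .terraform path above suggests) and that the repo folder is called custom (a hypothetical name). As far as I can tell from the module's examples, it picks up files whose names start with archetype_definition_, so the custom files may need to follow that naming convention.

```hcl
module "enterprise_scale" {
  source = "Azure/caf-enterprise-scale/azurerm"
  # ...keep your existing inputs here (version, root_parent_id, root_id,
  # custom_landing_zones and so on)...

  # Read custom archetype files from the repo itself instead of copying them
  # into .terraform/modules/... ("custom" is a hypothetical folder name).
  library_path = "${path.root}/custom"
}
```

Because path.root resolves to the folder containing main.tf, this works the same on a local machine and on a pipeline agent.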

Putting it all together, DevOps fashion

So how does all this look in principle?

The code is pulled (cloned) from the main GitHub repo, which contains my main.tf, variables.tf and terraform.tf, down to VSC (Visual Studio Code).

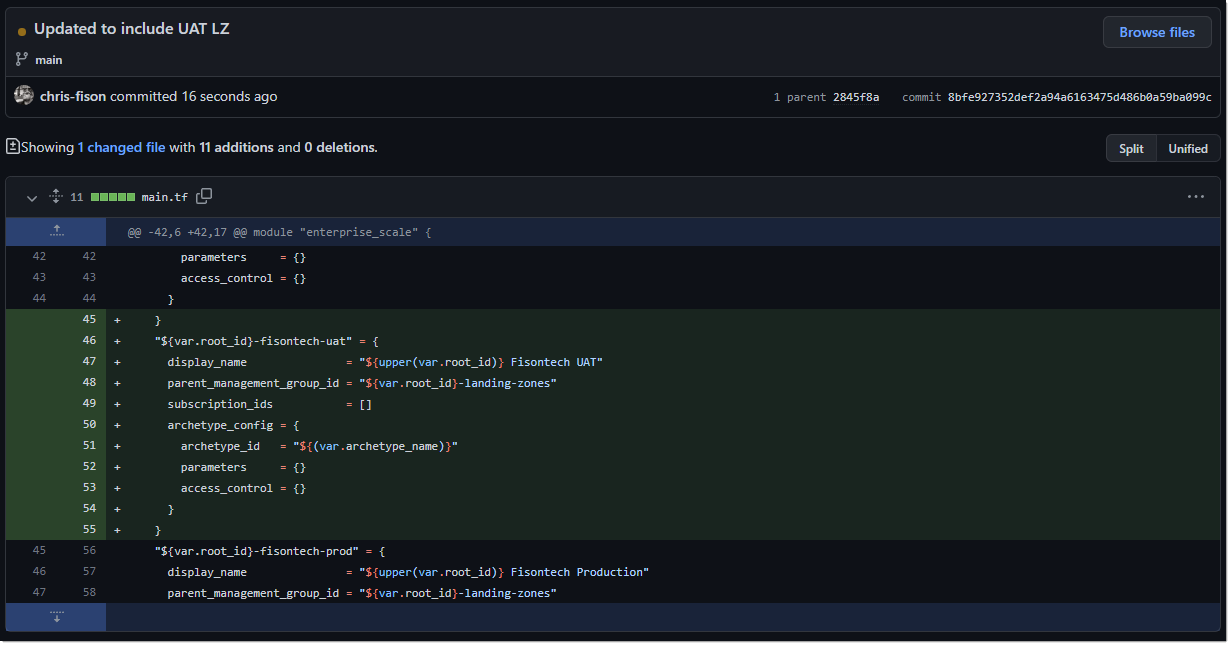

In VSC, I change my main.tf file to add the extra landing zone:

```hcl
    # ...end of the preceding landing zone entry in custom_landing_zones
  }

  "${var.root_id}-fisontech-uat" = {
    display_name               = "${upper(var.root_id)} Fisontech UAT"
    parent_management_group_id = "${var.root_id}-landing-zones"
    subscription_ids           = []
    archetype_config = {
      archetype_id   = var.archetype_name
      parameters     = {}
      access_control = {}
    }
  }
```
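The entry above relies on var.root_id and var.archetype_name, which live in variables.tf but aren't shown in this post. A minimal sketch of what those declarations might look like; the descriptions are illustrative, and the actual values (including the ID of the custom archetype) would come from a .tfvars file or pipeline variables.

```hcl
# variables.tf (sketch): declarations for the variables used by the UAT entry
variable "root_id" {
  type        = string
  description = "Prefix for management group IDs, e.g. <root_id>-landing-zones."
}

variable "archetype_name" {
  type        = string
  description = "ID of the custom archetype applied to the landing zone."
}
```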

The change is committed to GitHub, and we can verify this by checking the code that's changed.

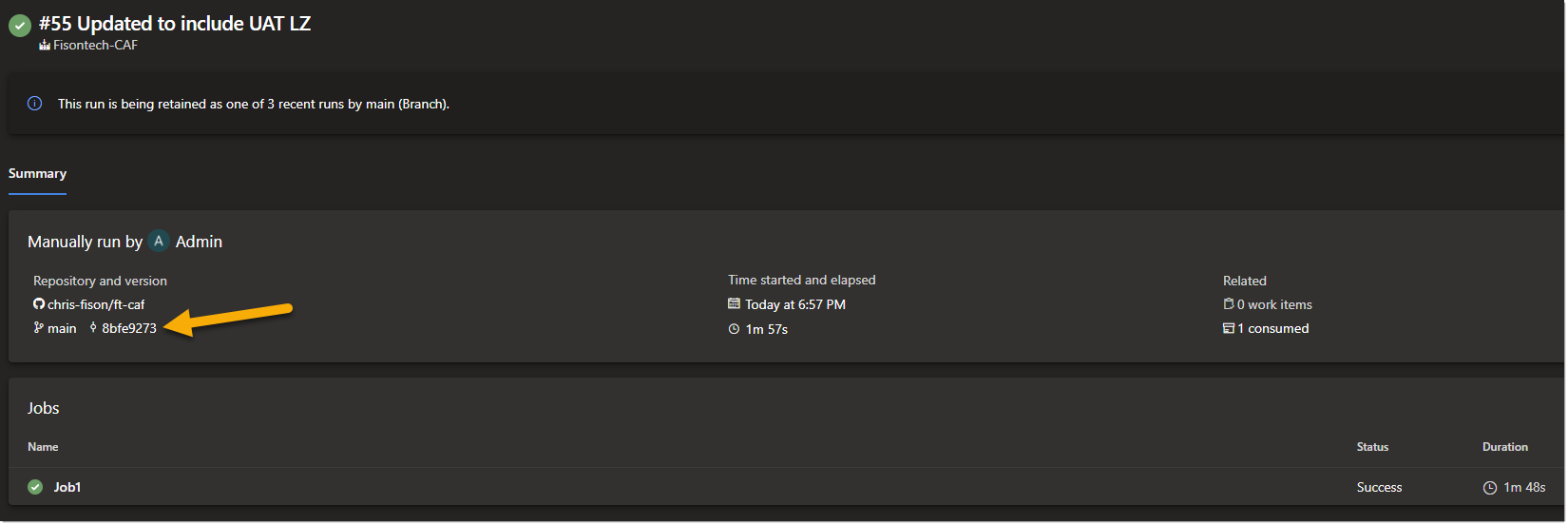

Now we can go into Azure DevOps and kick off the pipeline manually. As I am testing, for now I am just running a plan.

Next to the arrow we can see the latest commit, in this instance the change I made to main.tf adding the UAT landing zone (LZ).

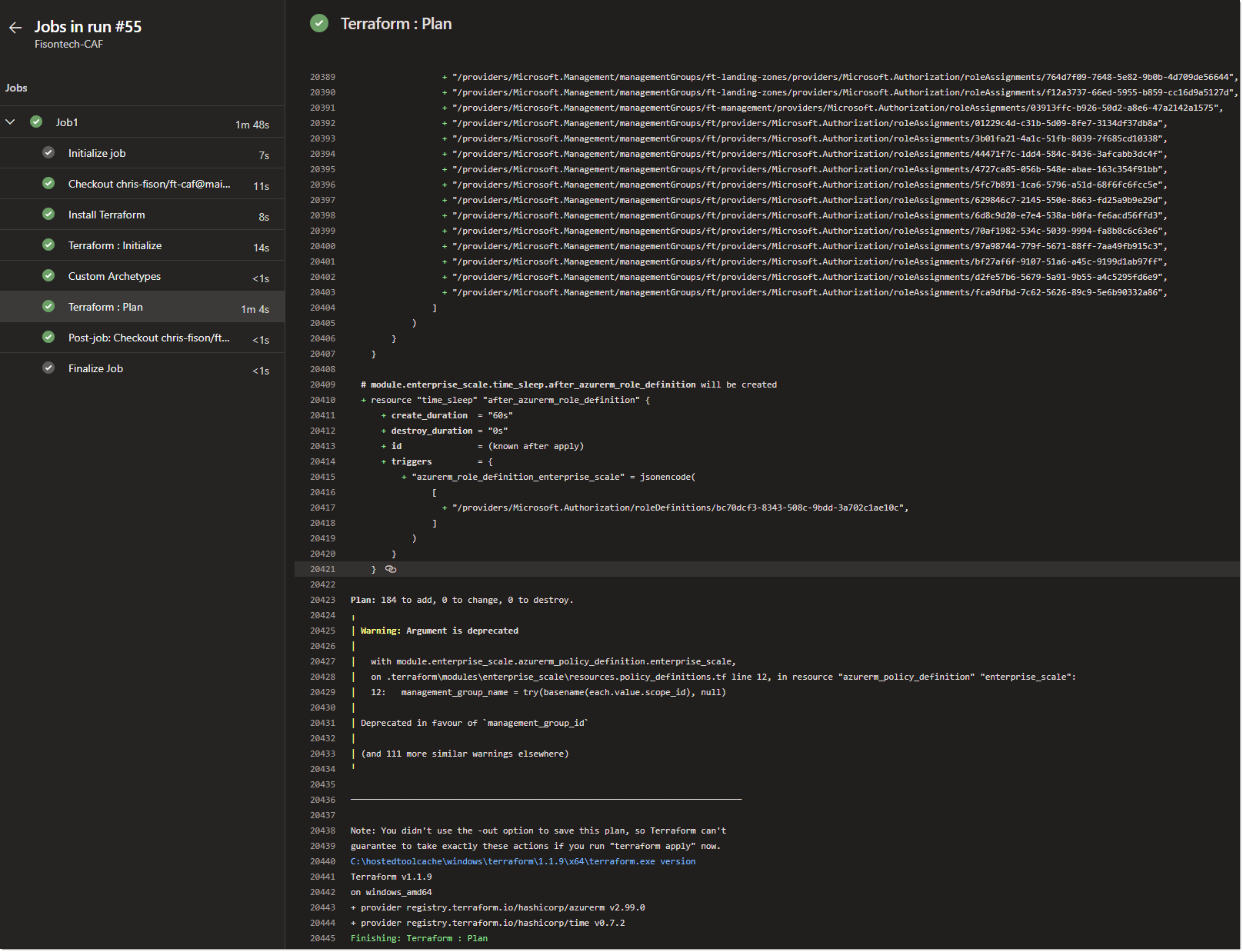

In the screenshot above, the plan has finished running. It's worth noting that this didn't actually apply anything, so I have now updated the pipeline to run the apply as well.

Now would normally be a good time to get a coffee and come back in a bit, but as it's past 1900 GMT… I'll avoid it!

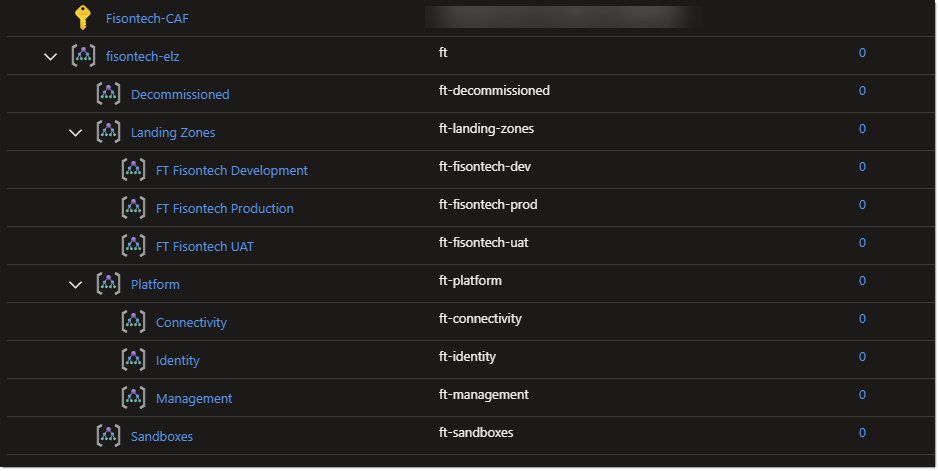

As we can see, the deployment finished, and here's our UAT LZ.

However, we now need to remove this LZ as it's no longer needed, so we can use this as an opportunity to show how that's done.

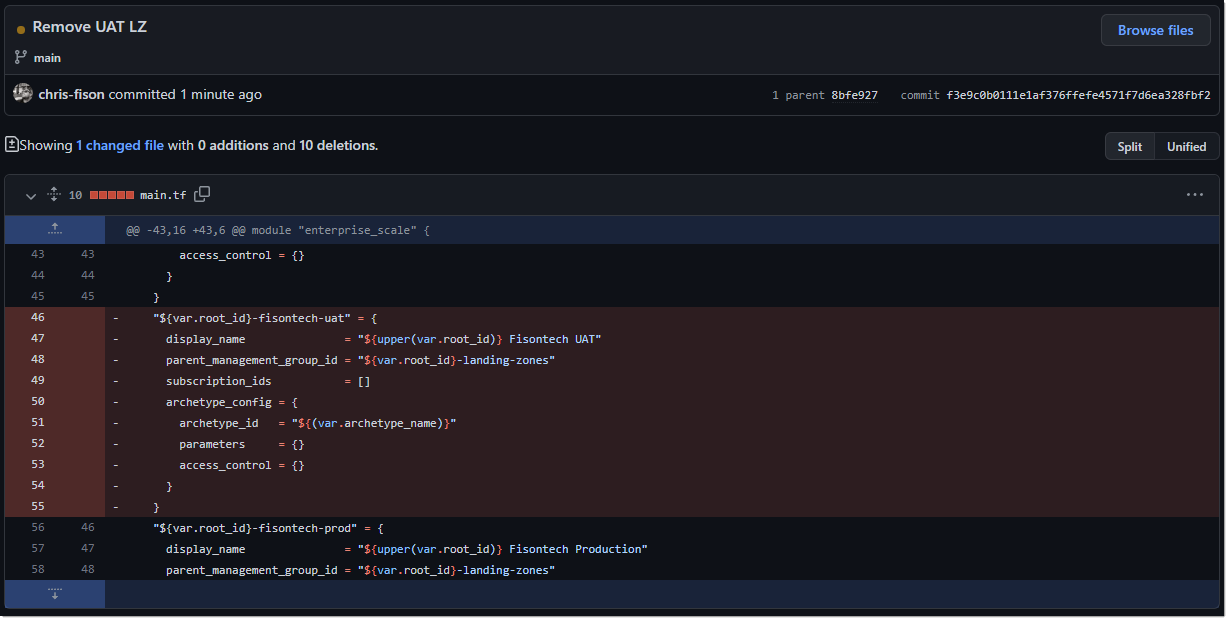

Again I checked out the code, removed the following LZ from main.tf, and checked the code back into GitHub.

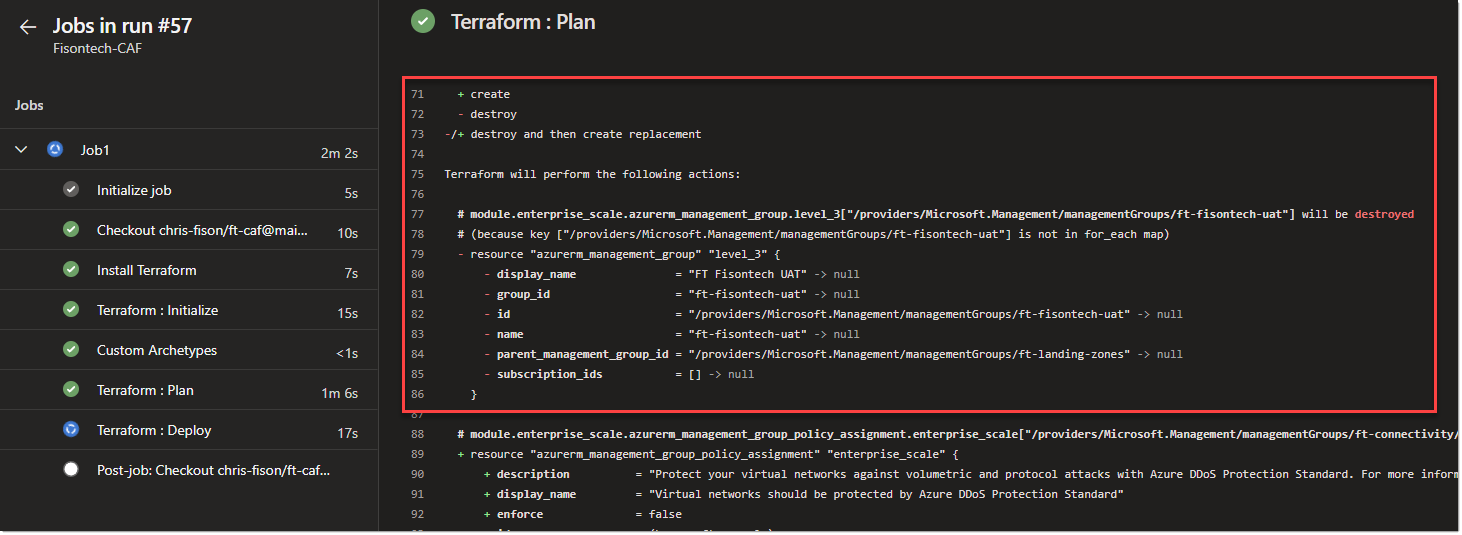

I have enabled full CI/CD within Azure DevOps, so the pipeline now runs automatically when code is committed (in production you would add an approval step). As you can see, the terraform plan picked up that the LZ needed removing.

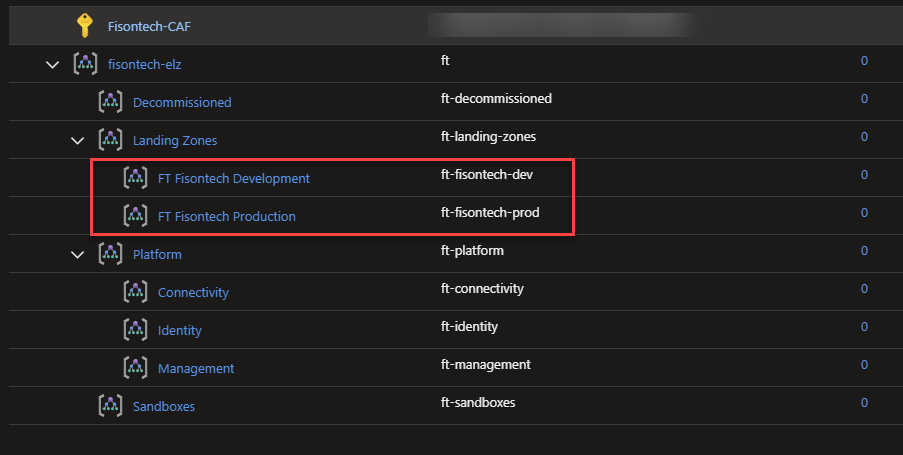

Once the apply finished, we can check the portal and see the LZ has been removed.

Final notes

I hope this blog was interesting. Please give me a shout on LinkedIn if you'd like to discuss anything Azure!